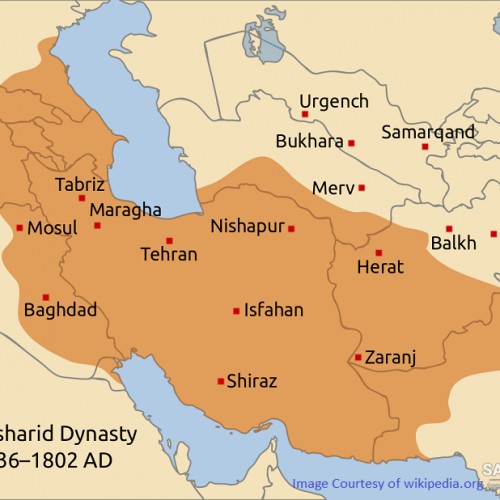

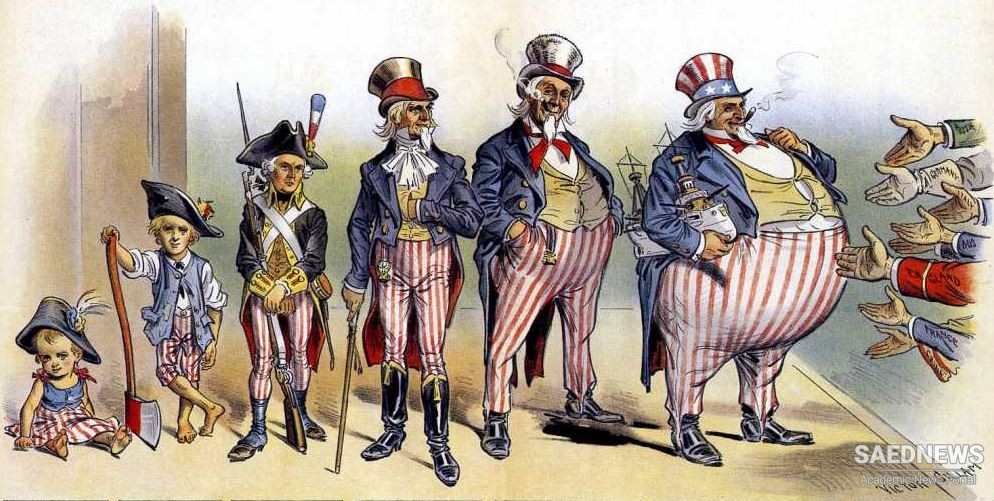

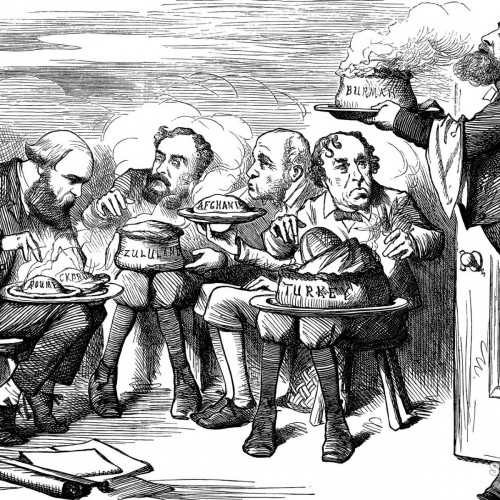

“American imperialism” is a term that refers to the economic, military, and cultural influence of the United States on other countries. First popularized during the presidency of James K. Polk, the concept of an “American Empire” was made a reality throughout the latter half of the 1800s. During this time, industrialization caused American businessmen to seek new international markets in which to sell their goods. In addition, the increasing influence of social Darwinism led to the belief that the United States was inherently responsible for bringing concepts such as industry, democracy, and Christianity to less developed “savage” societies. The combination of these attitudes and other factors led the United States toward imperialism. American imperialism is partly rooted in American exceptionalism, the idea that the United States is different from other countries due to its specific world mission to spread liberty and democracy. This theory is often traced back to the words of the nineteenth-century French observer Alexis de Tocqueville, who concluded that the United States was a unique nation, “proceeding along a path to which no limit can be perceived.”

Pinpointing the actual beginning of American imperialism is difficult. Some historians suggest that it began with the writing of the Constitution, while historian Donald W. Meinig argues that the imperial behavior of the United States dates back to at least the Louisiana Purchase. He describes this event as an “aggressive encroachment of one people upon the territory of another, resulting in the subjugation of that people to alien rule.” Here, he is referring to U.S. policies toward Native Americans, which he said were “designed to remold them into a people more appropriately conformed to imperial desires.” (Source: Lumen Learning)

Imperialism: Greed, Power and Oppression